Welcome to the September edition of Cloudera Fast Forward’s monthly newsletter. This month, we’re happy to share some new research! This is the first example of a new research format we’re experimenting with: more focussed and more frequent. Let us know what you think!

New research release today!

Meta-Learning

In contrast to how humans learn, deep learning algorithms need vast amounts of data and compute and may yet struggle to generalize. Humans are successful in adapting quickly because they leverage their knowledge acquired from prior experience when faced with new problems. In this webinar we will explain how meta-learning can leverage previous knowledge acquired from data to solve novel tasks quickly and more efficiently during test time.

Our report, Meta-Learning, is freely available online and is accompanied by code that applies the technique to an image dataset.

Research preview: Structural time series

Our next research release is coming to your screens in October, and will examine the application of generalized additive models to time series problems using the excellent Prophet package.

Recommended reading

Our research engineers share their favourite reads of the month.

-

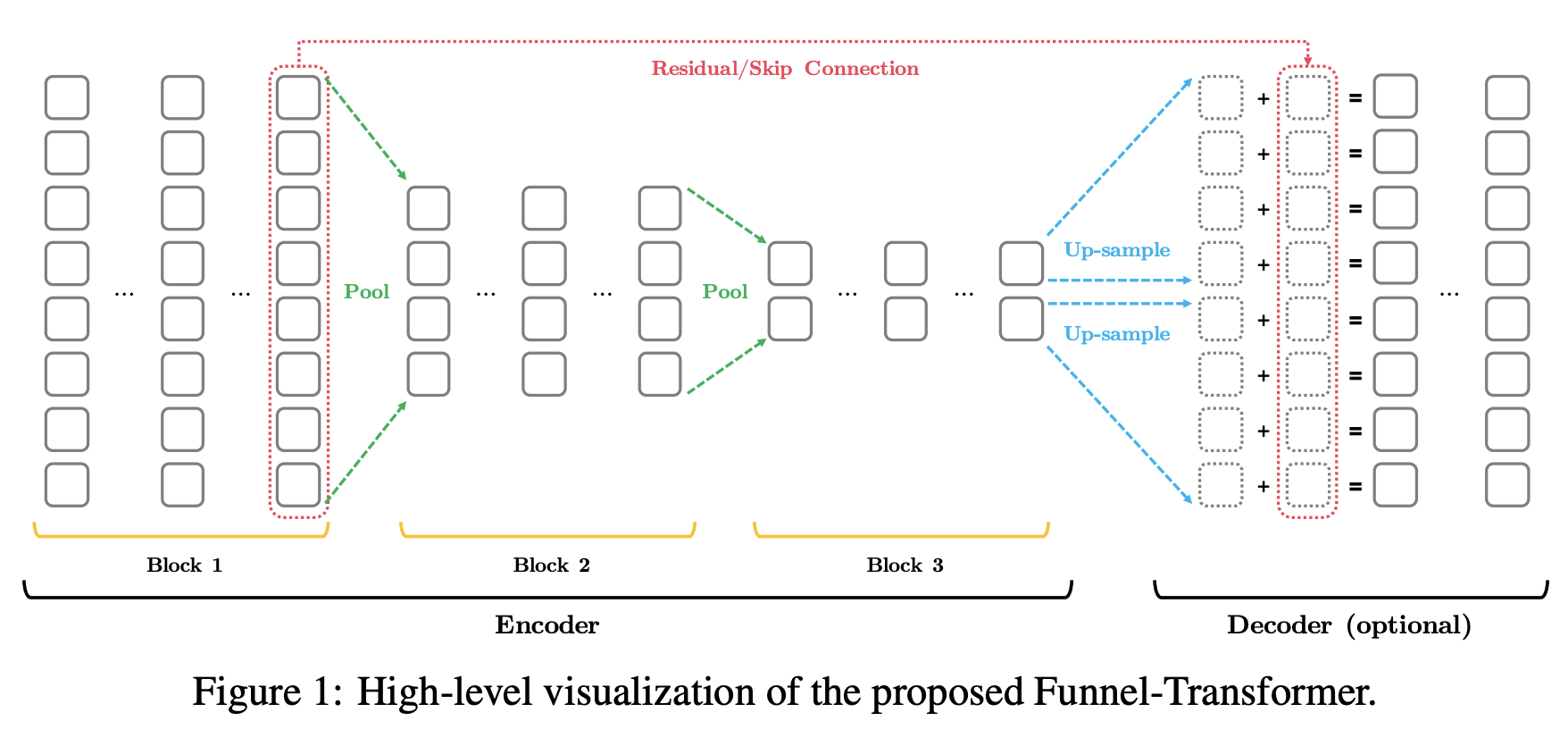

Funnel Transformer

One of the more active areas of NLP research focuses on how to harness the superior performance of transformer models at a fraction of the computational cost. The Funnel Transformer takes a step in this direction by introducing an architecture that borrows ideas from CNNs. It uses pooling to gradually compress the sequence of hidden states in the encoder, leading to fewer FLOPs overall. The authors demonstrate comparable performance to much larger BERT models. - Victor
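To make the pooling idea concrete, here is a minimal sketch of how averaging neighbouring hidden states shortens a sequence. It is not the authors' implementation: the function name `mean_pool`, the stride of 2, and the toy values are all assumptions chosen for illustration, and the attention layers that would sit between pooling steps are left out.

```python
from typing import List

Vector = List[float]


def mean_pool(hidden_states: List[Vector], stride: int = 2) -> List[Vector]:
    """Average each run of `stride` consecutive hidden states into one vector."""
    pooled = []
    for start in range(0, len(hidden_states), stride):
        window = hidden_states[start:start + stride]
        dim = len(window[0])
        # Element-wise mean over the vectors in this window.
        pooled.append([sum(vec[i] for vec in window) / len(window) for i in range(dim)])
    return pooled


# Toy sequence of 8 two-dimensional hidden states (made-up values).
states = [[float(t), float(2 * t)] for t in range(8)]

# Pool twice, as if between hypothetical blocks of layers: 8 -> 4 -> 2 positions.
lengths = [len(states)]
current = states
for _ in range(2):
    current = mean_pool(current)
    lengths.append(len(current))

print(lengths)
```

Each pooling step halves the number of positions that later layers have to process, which is where the compute saving would come from.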

-

Deep Transformer Models for Time Series Forecasting: The Influenza Prevalence Case

The transformer architecture has taken NLP by storm, so it is unsurprising to see it applied to other sequential data. This paper compares a novel transformer architecture for time series forecasting against common time series methods and the state-of-the-art influenza-like illness forecasting model. It finds that the transformer model performs similarly to the state of the art. I strongly suspect this is not the last application of transformer models to time series problems we’ll see. - Chris

-

The Language Interpretability Tool: Extensible, Interactive Visualizations and Analysis for NLP Models

In another contribution towards interpretable ML, the authors present the Language Interpretability Tool (LIT). LIT is designed to provide visibility into, and understanding of, complex NLP models and tasks. With a minimal amount of code, you can create an interactive, browser-based interface and probe your NLP model with techniques like local explanations, aggregate analysis, and counterfactual generation. It’s framework-agnostic, so it doesn’t matter if your models are in TensorFlow, PyTorch, or something else. I’m going to try this out on my NLP workflows and I’ll let you know how it goes! - Melanie

-

MLOps: Continuous delivery and automation pipelines in machine learning

The main hurdle to realizing benefits from a machine learning solution isn’t model training. Rather, the actual challenge is building an integrated system that can continuously operate in production. This well-developed article by Google discusses best practices for Machine Learning Operations (MLOps), the engineering culture and process that aims to unify ML system development and ML system operations through the application of standard DevOps principles. By advocating for automation and monitoring at all steps of ML system construction, the article shows how teams can achieve sustainable ML solutions. - Andrew

-

Learning to summarize with human feedback

Language models are great, but many are very large. This paper looks into leveraging reinforcement learning and human feedback to train language models that are not only smaller, but also better at summarization. Per the blog post: “Our core method consists of four steps: training an initial summarization model, assembling a dataset of human comparisons between summaries, training a reward model to predict the human-preferred summary, and then fine-tuning our summarization models with RL to get a high reward.” The approach is super interesting, and conceptually reminds me of active learning! — Shioulin
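As a rough illustration of the third step in the quote (training a reward model to predict the human-preferred summary), the sketch below scores one comparison with a pairwise logistic loss. The function name, the scalar rewards, and the choice of this particular loss are assumptions made for illustration; the quoted passage does not specify how the reward model is trained.

```python
import math


def pairwise_preference_loss(reward_preferred: float, reward_other: float) -> float:
    """Negative log-probability that the human-preferred summary wins,
    under a logistic model of the reward difference (an assumed loss)."""
    margin = reward_preferred - reward_other
    # -log(sigmoid(margin)), written in a numerically stable form.
    if margin >= 0:
        return math.log1p(math.exp(-margin))
    return -margin + math.log1p(math.exp(margin))


# Hypothetical scalar rewards for two candidate summaries of the same post.
agrees_with_human = pairwise_preference_loss(reward_preferred=2.0, reward_other=0.0)
disagrees_with_human = pairwise_preference_loss(reward_preferred=0.0, reward_other=2.0)

print(agrees_with_human < disagrees_with_human)
```

The loss is small when the reward model already ranks the human-preferred summary higher and large when it ranks the pair the other way round, so minimising it pushes the model towards the human comparisons.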

Events

- Our first-ever Research Roundup webinar is in preparation for late October. We’ll cover both the just-released Meta-Learning research and our upcoming Structural Time Series report. Watch our social accounts and your email for more info soon!