Welcome to the May Cloudera Fast Forward Labs newsletter. This month, we have some new research, a livestream you’re invited to, and some recommended reading.

New research: Session-Based Recommendations

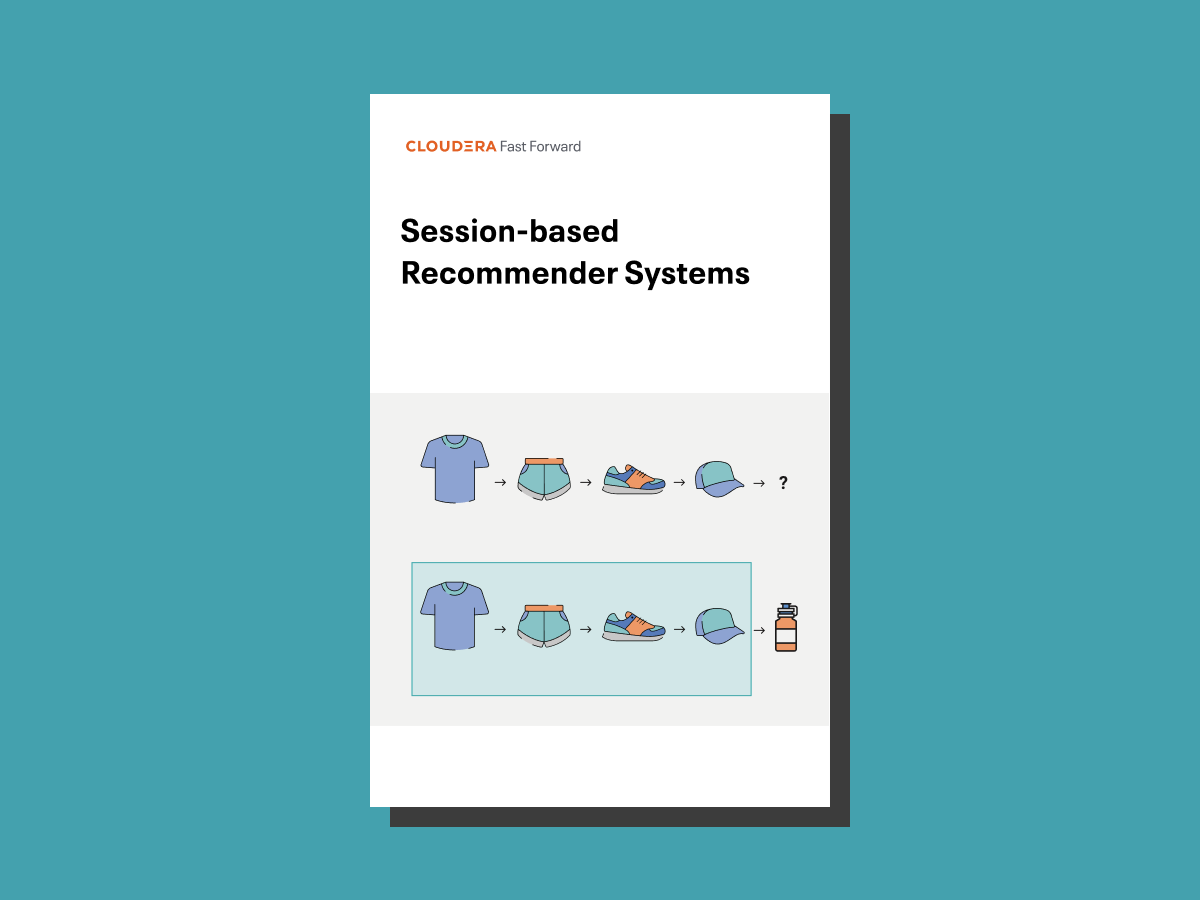

Recommendation systems have become a cornerstone of modern life, spanning sectors that include online retail, music and video streaming, and even content publishing. These systems help us navigate the sheer volume of content on the internet, allowing us to discover what’s interesting or important to us. A key trend over the past few years has been session-based recommendation algorithms, which make recommendations based solely on a user’s interactions in an ongoing session and do not require user profiles or complete historical preferences. Our Session-based recommender systems report covers:

- the problem space and applications

- a simple yet powerful NLP-based approach (word2vec) to recommend a next item to a user

- experimental findings when employing this approach

- and many of the considerations necessary for building thoughtful, information-driven recommender systems

Follow the links in the report to find code snippets so you can try it for yourself!
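To give a flavour of the idea before you open the report, here is a minimal sketch of session-based next-item recommendation. It is not the report's code: instead of training word2vec embeddings, it uses plain co-occurrence counts within a sliding window as a simple stand-in, keeping the same framing of sessions as "sentences" and items as "words". The function names, the `window` parameter, and the toy sessions are all hypothetical.

```python
from collections import Counter, defaultdict


def build_cooccurrence(sessions, window=2):
    """Count how often two items appear within `window` positions of each other."""
    cooc = defaultdict(Counter)
    for session in sessions:
        for i, item in enumerate(session):
            lo = max(0, i - window)
            hi = min(len(session), i + window + 1)
            for j in range(lo, hi):
                # Skip the item itself and repeated occurrences of it.
                if j != i and session[j] != item:
                    cooc[item][session[j]] += 1
    return cooc


def recommend_next(cooc, current_session, k=3):
    """Rank items not yet seen in the session by co-occurrence with the latest item."""
    if not current_session:
        return []
    last_item = current_session[-1]
    seen = set(current_session)
    ranked = cooc.get(last_item, Counter()).most_common()
    return [item for item, _ in ranked if item not in seen][:k]


# Toy sessions (made up for illustration): each list is one anonymous browsing session.
sessions = [
    ["tent", "sleeping_bag", "stove"],
    ["tent", "sleeping_bag", "lantern"],
    ["stove", "fuel", "lantern"],
    ["novel", "bookmark"],
]

cooc = build_cooccurrence(sessions)
print(recommend_next(cooc, ["tent"]))
```

No user profile is involved: the recommendation depends only on what has happened in the current session. A learned embedding model such as word2vec can generalise beyond exact co-occurrences, which this counting sketch cannot.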

Fast Forward Live!

Join us live on June 2 at 9am PST for our third-ever livestream. We’ll chat about our latest research output and take live questions. You can tune in here.

You can also still catch a replay of our previous livestreams: Few-Shot Text Classification and Representation Learning for Software Engineers.

Meta Learning

Also in us-speaking-about-things, our research engineer Nisha will be speaking about meta-learning at OSDC: Europe on June 10th at 3:10 PM BST.

Recommended reading

Some of our team share their favourite reads of the month:

- How Many Data Points is a Prompt Worth?

Pretrained language models are becoming a staple of the modern NLP stack. Typically, these models are “fine-tuned” for a downstream task (e.g., classification, question answering) through the use of a “head” model: a classification layer that takes in pretrained representations and predicts an output. However, an alternative paradigm is gaining traction: prompting. This method adapts the pretrained language model directly by training it to recognize structured prompts designed to coax the model to produce a textual response corresponding to an output class (T5 and GPT-3 use this). These prompts can provide additional information to the language model, which could make them especially advantageous in low-data regimes.

This paper examines the claim that prompting requires fewer supervised data points to learn a given downstream task. The authors perform a slew of experiments on many standardized NLP tasks and quantify the average data advantage, or how many data points a prompt is worth. They find that, in nearly all cases, prompting requires fewer data points to learn a downstream task, and sometimes substantially so! This is exciting because it indicates that large, pretrained language models can be better adapted to NLP tasks in low-data regimes. However, this comes with a caveat: designing custom prompts can inject human bias into language models, which already suffer from biases of their own! Proceed with care. - Melanie

- Collider bias undermines our understanding of COVID-19 disease risk and severity

Causal graphs give us a unified means of understanding many common (and dangerous when ignored) statistical effects, like Simpson’s paradox and selection bias. This paper analyses risk factors for COVID-19 infection through the lens of causal graphs, especially focussing on collider bias. If not properly handled, collider bias distorts associations between variables (selection bias is itself a form of collider bias). Armchair statistics has understandably grown in prominence during the pandemic, and this paper also serves to introduce the causal effects it discusses using the now-accessible COVID statistics. - Chris
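For readers who have not met collider bias before, a small simulation can make the effect concrete. The sketch below is not taken from the paper and uses no COVID data: it assumes two hypothetical, independent standard-normal traits and a made-up selection rule (an individual enters the sample only when the sum of the two traits exceeds a threshold). Selection is the collider, and conditioning on it induces a correlation that does not exist in the full population.

```python
import random


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / (var_x * var_y) ** 0.5


def simulate_collider(n=20000, threshold=1.0, seed=0):
    """Return (full-population correlation, selected-sample correlation, sample size)."""
    rng = random.Random(seed)
    # Two independent causes (hypothetical traits, generated with no link between them).
    a = [rng.gauss(0, 1) for _ in range(n)]
    b = [rng.gauss(0, 1) for _ in range(n)]
    # Collider: whether an individual is observed depends on BOTH traits.
    selected = [(x, y) for x, y in zip(a, b) if x + y > threshold]
    full_corr = pearson(a, b)
    selected_corr = pearson([x for x, _ in selected], [y for _, y in selected])
    return full_corr, selected_corr, len(selected)


full_corr, selected_corr, n_selected = simulate_collider()
print(f"correlation in full population: {full_corr:+.3f}")
print(f"correlation in selected sample: {selected_corr:+.3f} (n={n_selected})")
```

In the full population the two traits are essentially uncorrelated, while in the selected sample they are clearly negatively correlated, even though neither trait influences the other.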

- Representing Numbers in NLP: a Survey and a Vision

Reasoning with quantities, counts, and numerical representations is critical to understanding natural language. In both the original GPT-3 paper and a 2021 study, researchers demonstrated that, despite the effectiveness of language modeling at scale, the 175B-parameter behemoth that is GPT-3 struggles with mathematical concepts in language. This paper contextualizes recent work and challenges on numeracy in NLP by proposing a taxonomy of tasks in the space and offering a vision for future research. - Andrew