January 2022

Welcome to the January edition of the Cloudera Fast Forward Labs newsletter. This month we provide a glimpse of our next research topic, talk about the uniqueness of snowflakes, and share what we’ve been reading this month.

It’s the start of a new year and the CFFL team is shaking off the holiday fog and ramping up with some awesome new research. While we don’t have results to share yet, we thought we could give a sneak peek at what we’re digging into — work that we’ll share more fully in the coming months!

Research Preview: Text Style Transfer

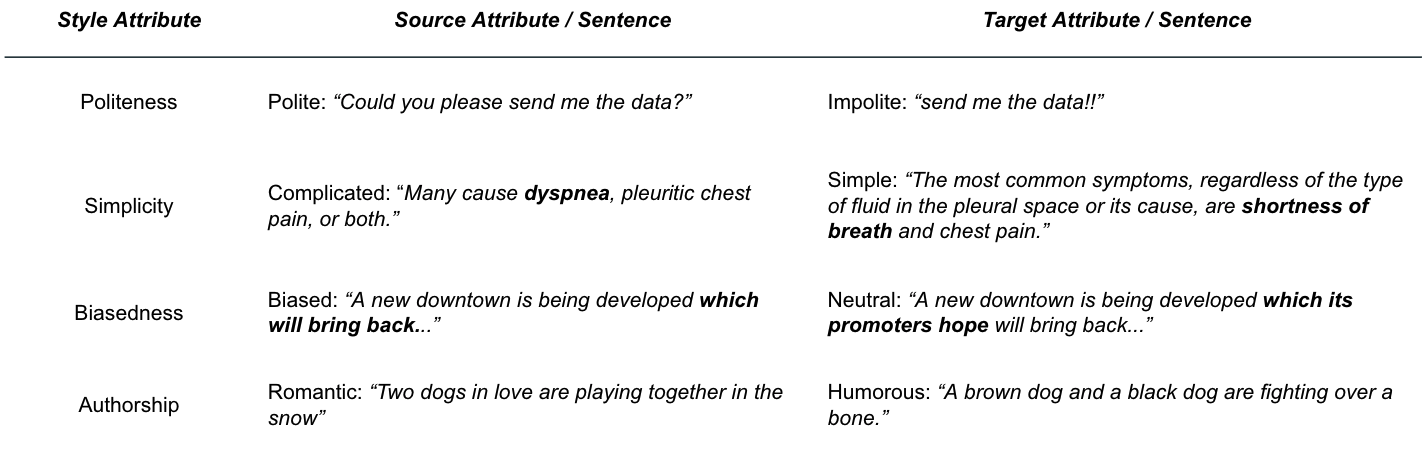

Text style transfer (TST) is a natural language generation task with a long history in the field of natural language processing. This task has recently re-gained attention in academic circles thanks to the advancements in deep learning. The goal of TST is to automatically control the style attributes of text while preserving the content. Linguistic style is an important consideration for making NLP more user-centric that, historically, has been largely ignored by the colossal language models out there today. But, as humans, we know that language is situational — not only is the context of the words important, but the style of text itself depends heavily on the user, environment, or scenario.

Above are some examples of how the same content can be portrayed with different styles. The ability to automatically convert between various styles has a multitude of applications including chatbots with consistent personae (e.g., empathetic rather than emotionless), artistic writing aids, automatic text simplification, neutralizing online text, and many others.

Stay tuned for more on this fascinating NLP task!

Guest blog: The Most Unique Snowflake

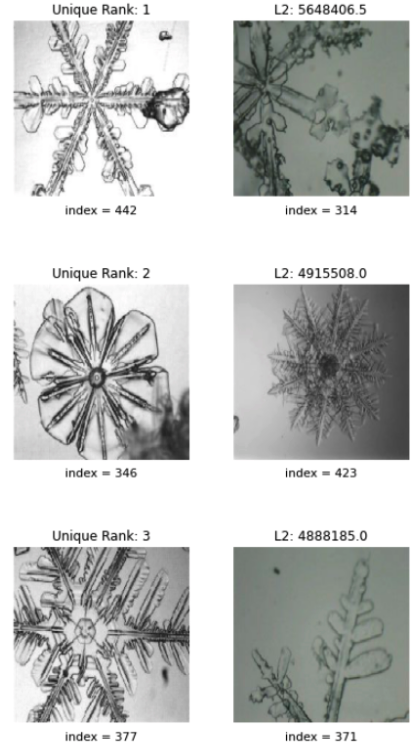

Our fabulous colleague, Jake Bengston, is a marketing guru with the heart (and head) of a data scientist! He recently released this article on the Cloudera blog detailing how he modified one of CFFL’s applied machine learning prototypes built on the principles discussed in our Deep Learning for Image Analysis research report. You don’t want to miss this one because it has code examples!

Every snowflake is unique, but some must be less unique than others. In this blog we demonstrate how to repurpose an existing Applied ML Prototype (Deep Learning for Image Analysis) to create an ML model capable of finding the most unique snowflake.

Fast Forward Live!

Check out replays of livestreams covering some of our research from last year.

Deep Learning for Automatic Offline Signature Verification

Session-based Recommender Systems

Representation Learning for Software Engineers

Recommended reading

Our research engineers share their favorite reads of the month.

SlowFast Networks for Video Recognition

Studies show that some retinal cells (magnoceullular) in primates are particularly well suited for detecting fast temporal changes in the observed scene, but not spatial detail or color. Other cells (parvocellular) are the opposite and detect fine grained spatial details and color, but not fast motion. Those biological observations motivated the Slow Fast Networks (SFNs) family of architectures for video understanding. SFNs have two pathways. But in contrast with two-stream architectures that take video and optical flow as input, SFNs take only video, sampled at two different rates:

- The Slow pathway samples at low rate (say 2 frames per second), and, like object detectors, has layers with many channels, intended to capture mostly spatial semantics – the kind of object. It also captures slow motion by using 3D convolutions in later layers, where the field of view is large enough to capture large objects.

- The Fast pathway samples the video at a high rate (say 16 frames per second), and is intended for capturing temporal semantics – the kind of action; it has fewer channels in order to compensate for the increased compute derived from the high frame rate.

SFN achieved state-of-the-art results in 2019 and is an interesting read because of the time-versus-space insights relevant to video understanding. — Daniel.

Non-local operations (NLOs) are introduced in this article as a means to enhance convolutional neural networks for computer vision tasks. An insightful discussion of how attention (the mechanism underlying Transformers) is but one of many possible NLOs is presented. For the specific tasks considered (video action recognition, object detection and instance segmentation), NLOs are shown to be complementary to convolutions: NLOs are particularly well suited for long-range dependencies, while convolutions are good at capturing short range interactions. Both types of operations build representations by performing weighted averages of values on a feature map. But whereas convolutions use fixed weights and are typically applied locally, the weights of NLOs depend on the feature values themselves, and are applied not only locally but at a distance, too.

The proposed architectures are built by taking an image classification network (Inception), inflating some of the 2D convolutions to 3D, and inserting non-local operator blocks at different locations. The more non-local blocks, the higher the performance. Experiments show that adding more convolutions does not produce the same gains as adding non-local layers, which are therefore argued to be a crucial element in the architecture. — Daniel.

Real-time machine learning: challenges and solutions

Not all machine learning problems demand real-time solutions — but for those that do, its crucial to understand the challenges that stand in the way. In this article, Chip Huyen outlines the problem space and solution patterns that leading ML teams have adopted for online prediction and continual learning. Chip proposes an evolution of ML system maturity and breaks down the stages involved. In the end, moving from batch prediction to real-time is largely an infrastructure problem that requires organizational alignment and cooperation between the data science, platform, and engineering teams. An interesting read for anyone curious about scaling online ML systems. — Andrew

WebGPT: Improving the factual accuracy of language models through web browsing

In this blog post, researchers from OpenAI provide a high-level overview of their recent paper on a new model called WebGPT, which has been trained to perform long-form question answering by, well, googling and citing its work! WebGPT is a GPT3-based model that is provided an open-ended question and a summary of a browser state. The model must execute search, find in page, and quote commands to collect passages from web pages and then synthesize that information into a long-form answer to the question, as well as cite the web pages it ultimately used.

Finally, a model that can do my internet surfing for me!

However, as the authors are keenly aware, GPT3 (and other text generating) models are prone to “hallucinating” information: generating text that, at first blush, may seem reasonable but, upon closer inspection, is neither consistent nor factually accurate. To address this the authors used human feedback to improve both the model’s helpfulness and accuracy. Additionally, human checkers also verified the model’s accuracy by determining if WebGPT’s answer was supported by reliable sources. But challenges remain — what determines a source’s reliability? Furthermore, the very act of providing citations can lend the model an air of authority when, in reality, WebGPT still makes basic errors. Even with these challenges, WebGPT remains an impressive piece of engineering and an interesting blog (and paper!) to read. — Melanie