September 2022

Welcome to the September edition of the Cloudera Fast Forward Labs newsletter. This month we’re talking about ethics, and we have all kinds of goodies to share, including the final installment of our Text Style Transfer series and a couple of offerings from our newest research engineer. Throw in some choice must-reads and an ASR demo, and you’ve got yourself an action-packed newsletter!

New Research!

Ethical Considerations When Designing an NLG System

In the final post of our blog series on Text Style Transfer, we discuss some ethical considerations when working with natural language generation systems, and describe the design of our prototype application: Exploring Intelligent Writing Assistance.

Here we emphasize the imperative for a human-in-the-loop user experience as a risk mitigation strategy when designing NLG tools. We believe text style transfer has the potential to empower writers to better express themselves, but not by blindly generating text. Rather, generative models should be combined with interpretability methods to help writers understand the nuances of linguistic style and to suggest stylistic edits that may improve their writing.

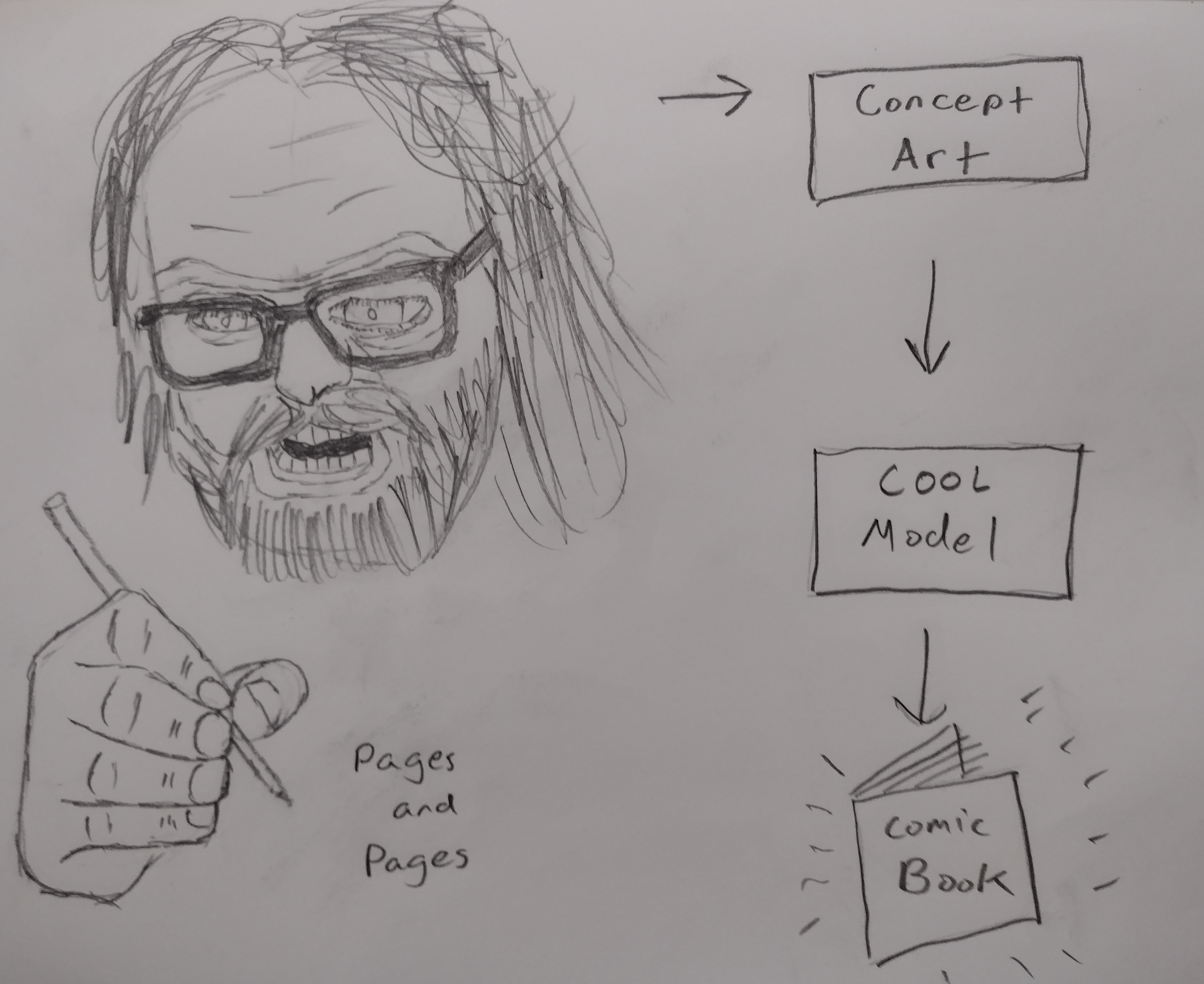

Thought experiment: Human-centric machine learning for comic book creation

Our new research engineer, Mike Gallaspy, lightheartedly speculates on using machine learning techniques for creating comic book art in his first blog post. Take a peek and enjoy some of his hand-drawn illustrations!

Ethics Sheet for AI-assisted Comic Book Art Generation

In keeping with this month’s ethics theme, here is a companion piece to the blog post above. This article is our simplified take on an ethics sheet for the task of AI-assisted comic book art generation, inspired by “Ethics Sheets for AI Tasks.” In it, we take a look at some of the ethical considerations involved in the creation and utilization of a state-of-the-art AI system for generative art creation.

Fast Forward Live!

Check out replays of livestreams covering some of our research from last year.

Deep Learning for Automatic Offline Signature Verification

Session-based Recommender Systems

Representation Learning for Software Engineers

Recommended Reading

CFFLers share their favorite reads of the month.

3D Human Shape Style Transfer

I am interested in style transfer. My first blog post speculates about a style transfer use case, and I believe there are many interesting applications yet to be discovered in that problem domain. The very concepts of “style” and “content” that underlie the task of style transfer are hard to pin down in a mathematically precise way, but are very intuitive to humans. I find this dichotomy fascinating.

The paper I’m recommending, Regateiro and Boyer, “3D Human Shape Style Transfer,” examines the use of style transfer to render human characters in novel poses, without an explicit pose or shape model. This work stimulates my imagination by suggesting new creative applications. For example, if you could create some character art by hand and learn a 3D model of that character by some method,* then Regateiro and Boyer’s method could let you generate plausible renderings of novel poses by reference. Given the rise in prominence of models like CLIP, a “reference” could conceivably be another image, a text prompt, or both.

*My honorable mention paper for this month speaks to learning 3D models: Kanazawa et al., “End-to-End Recovery of Human Shape and Pose.” — Mike

Boosting Monocular Depth Estimation Models to High-Resolution via Content-Adaptive Multi-Resolution Merging

Despite the intimidating title (it’s a mouthful, I know), this paper is a great read. Monocular (or single-image) depth estimation aims to extract the structure of a scene from a single image, an important task for applications like 3D scene reconstruction, autonomous driving, and augmented reality. While deep learning has proven effective at the task, the inferred depth maps are often of low resolution and therefore lack fine-grained details, which limits their usefulness in real-world applications.

This paper introduces a novel technique that makes use of depth predictions from pre-trained models by varying the input image resolution, and combines the resulting depth maps. By merging high and low resolution estimations, the authors are able to generate multi-megapixel depth maps with a high level of detail using purely pre-trained models. — Andrew

Introducing Whisper

OpenAI have done it again! Whisper is an automatic speech recognition (ASR) system trained on 680,000 hours of multilingual and multitask supervised data collected from the web. Whisper is unique because this dataset is one of the largest and most diverse ever used. Furthermore, Whisper was not fine-tuned to any specific ASR task or portion of the dataset. This means that it does not beat models that have been specialized for certain competitive ASR benchmarks. However, Whisper’s zero-shot performance across many diverse datasets and tasks is far more robust and makes 50% fewer errors than these more specialized models.
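To make the "50% fewer errors" comparison concrete: ASR errors are commonly measured as word error rate (WER), the number of word substitutions, deletions, and insertions needed to turn a transcript into the reference, divided by the number of reference words. The sketch below is a minimal, illustrative implementation and assumes WER is the metric behind the claim. The function names and the example transcripts are hypothetical; they are not taken from OpenAI's code or evaluation data.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level edit distance divided by the number of reference words."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference must contain at least one word")

    # prev[j] holds the edit distance between the reference words seen so far
    # and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i] + [0] * len(hyp)
        for j, hyp_word in enumerate(hyp, start=1):
            substitution_cost = 0 if ref_word == hyp_word else 1
            curr[j] = min(
                prev[j] + 1,                      # deletion (reference word missing)
                curr[j - 1] + 1,                  # insertion (extra hypothesis word)
                prev[j - 1] + substitution_cost,  # match or substitution
            )
        prev = curr
    return prev[-1] / len(ref)


def relative_error_reduction(baseline_wer: float, new_wer: float) -> float:
    """Fraction of the baseline's errors that the new system avoids."""
    if baseline_wer <= 0:
        raise ValueError("baseline_wer must be positive")
    return 1.0 - new_wer / baseline_wer


# Hypothetical transcripts, for illustration only.
reference = "please call stella and ask her to bring these things"
transcript_a = "please call stellar and ask her bring this thing"
transcript_b = "please call stella and ask her to bring this thing"

wer_a = word_error_rate(reference, transcript_a)
wer_b = word_error_rate(reference, transcript_b)
print(f"WER A: {wer_a:.2f}, WER B: {wer_b:.2f}")
print(f"Relative error reduction: {relative_error_reduction(wer_a, wer_b):.0%}")
```

Real evaluations usually normalize text (punctuation, casing, number formatting) before scoring; this sketch only lowercases and splits on whitespace.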

Whisper is multilingual and can perform all kinds of tasks, including multilingual transcription, translation into English, and language identification.

OpenAI have also published a paper and open-sourced their code, both linked from their blog, so you can try Whisper for yourself! I created a bare-bones WhisperDemo Colab notebook (using their Colab example as a starting point) that lets you record your own audio and transcribe it with Whisper. Enjoy! – Melanie