Jan 10, 2017 · post

Five 2016 Trends We Expect to Come to Fruition in 2017

The start of a new year is an excellent occasion for audacious extrapolation. Based on 2016 developments, what do we expect for 2017?

This blog post covers five prominent trends: Deep Learning Beyond Cats, Chat Bots - Take Two, All the News In the World - Turning Text into Action, The Proliferation of Data Roles, and _What Are You Doing to My Data?_

(1) Deep Learning Beyond Cats

In 2012, Google found cats on the internet using deep neural networks. With a strange sense of nostalgia, the post reminds us how far we have come in only four years, with more nuanced reporting as well as technical progress. The 2012 paper predicted the findings could be useful in the development of speech and image recognition software, including translation services. In 2016, Google’s WaveNet can generate human speech; Generative Adversarial Networks (GANs), Plug & Play Generative Networks, and PixelCNN can generate images of (almost) naturalistic scenes including animals and objects; and machine translation has improved significantly. Welcome to the future!

In 2016, we saw neural networks combined with reinforcement learning (i.e., deep reinforcement learning) beat the reigning champion Lee Sedol in Go (the battle continues online) and solve a real problem: deep reinforcement learning significantly reduces Google’s energy consumption. The combination of neural networks with probabilistic programming (i.e., Bayesian Deep Learning) and symbolic reasoning proved (almost) equally powerful. We saw significant advances in neural network architecture, for example, the addition of long-term memory (Neural Turing Machines), which adds a capacity resembling “common sense” to neural networks and may help us build (more) sophisticated dialogue agents.

In 2017, enabled by open-source software like Google’s TensorFlow (released in late 2015), Theano, and Keras, neural networks will find (more) applications in industry (e.g., recommender systems), but widespread adoption won’t come easily. Algorithms are good at playing games like Go because games make it easy to generate the amount of data needed to train these advanced, data-hungry algorithms. The availability of data, or lack thereof, is a real bottleneck. Efforts to use pre-trained models for novel tasks using transfer learning (i.e., using what you have learned on one task to solve another, novel task) will mature and unlock a bigger class of use cases.

Parallel work on deep neural network architecture will enhance said architecture, deepen our understanding, and hopefully help us develop principled approaches for choosing the right architecture (there are many) for tasks beyond “CNNs are good for translation invariance and RNNs for sequences”.

In 2017, neural networks will go beyond game playing and deliver on their promise to industry.

(2) Chat Bots - Take Two

Many declared 2016 the year of the bots. It wasn’t. The narrative was loud but the results were, more often than not, disappointing. Why?

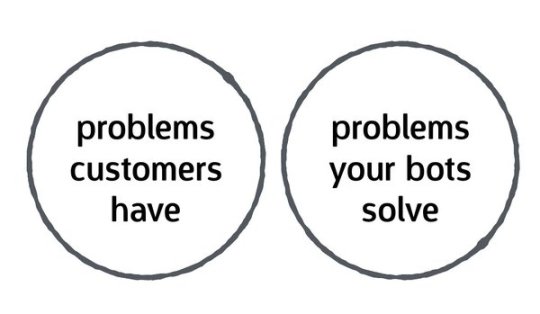

Amongst the many reasons: lack of avenues for distribution, lack of enabling technologies, and the tendency to treat bots as a purely technical challenge rather than a product or design challenge. Through hard work and often failure, the best driver of future success, the bot community learned some valuable lessons in 2016. Bots can be brand ambassadors (e.g., Casper’s Insomnobot-3000) or marketing tools (e.g., Call of Duty’s Lt Reyes). Bots are good for tasks with clear objectives (e.g., scheduling a meeting) while exploration, especially if the content can be visualized, is better left to apps (you can, of course, squeeze it into a chatbot solution). Facebook’s Messenger platform added an avenue for distribution; Google (Home, Allo) may follow while Apple (Siri) will probably stay closed. Facebook’s Wit.ai adds technology to enable developers to build bots, and at re:Invent 2016, Amazon unveiled Lex.

After excitement and inflated expectations in 2016, we will see useful, goal-oriented, narrow-domain chatbots with use-case-appropriate personality, supported by human agents when the bot’s intent recognition fails or when it wrangles a conversation. We will see more sophisticated intent recognition, graceful error handling, and more variety in the largely human-written template responses, while ongoing research into end-to-end dialogue systems promises more sophisticated chatbots in the years to come. After the hype, a small, committed core remains, and they will deliver useful chatbots in 2017.

Who wins our “The Weirdest Bot Of 2016” award? The Invisible Boyfriend.

(3) All the News In the World - Turning Text into Action

In the beginning there was the number; algorithms work on numerical data. Traditionally, natural language was difficult to turn into numbers that capture the meaning of words. Conventional bag-of-words approaches, useful in practice, fail to use syntactic information and fail to understand that “great” and “awesome” or Cassius Clay and Muhammad Ali are related concepts.
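To make that limitation concrete, here is a minimal sketch of a bag-of-words representation. The two example reviews and the helper names `bag_of_words` and `shared_words` are made up for illustration; real pipelines use proper tokenizers rather than whitespace splitting.

```python
from collections import Counter


def bag_of_words(text):
    """Count lowercase, whitespace-separated tokens; word order is discarded."""
    return Counter(text.lower().split())


def shared_words(a, b):
    """Return the set of tokens that two texts have in common."""
    return set(bag_of_words(a)) & set(bag_of_words(b))


# Hypothetical example sentences, invented for this sketch.
review_a = "the movie was great"
review_b = "the film was awesome"

# Only the function words overlap; "great" and "awesome" are treated
# as completely unrelated tokens.
print(shared_words(review_a, review_b))

# Syntactic information is lost: both sentences get the same representation.
print(bag_of_words("dog bites man") == bag_of_words("man bites dog"))
```

Two reviews that say nearly the same thing share nothing but “the” and “was”, and two sentences with opposite meanings look identical.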

In 2013, Tomas Mikolov proposed a fast and efficient way to train word embeddings. A word embedding is a numerical representation of a word, say “great”, that is learned by studying the contexts in which “great” tends to appear. The word embedding captures the meaning of “great” in the sense that “great” and “awesome” will be close to one another in the multi-dimensional word embedding space; the algorithm has learned they are related. Alternatives like GloVe, word2vec for documents (i.e., doc2vec), and underlying methods like skip-gram and skip-thought further improved our ability to turn text into numbers and opened up natural language to machine learning and artificial intelligence.
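“Close to one another” is usually measured with cosine similarity. The sketch below uses tiny, hand-picked three-dimensional vectors that are purely hypothetical; real embeddings are learned from large corpora and typically have a few hundred dimensions.

```python
import math


def cosine_similarity(u, v):
    """Cosine of the angle between two vectors of equal length."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


# Hypothetical embeddings, invented for illustration only.
toy_embeddings = {
    "great": [0.9, 0.8, 0.1],
    "awesome": [0.85, 0.9, 0.05],
    "table": [0.1, 0.0, 0.95],
}

sim_related = cosine_similarity(toy_embeddings["great"], toy_embeddings["awesome"])
sim_unrelated = cosine_similarity(toy_embeddings["great"], toy_embeddings["table"])

# Related words point in nearly the same direction; unrelated words do not.
print(f"great vs awesome: {sim_related:.2f}")
print(f"great vs table:   {sim_unrelated:.2f}")
```

With trained embeddings the same comparison works on real vocabulary, which is exactly what the bag-of-words view cannot offer.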

In 2016, fastText allowed us to deal with out-of-vocab words (words the language model was not originally trained on) and SyntaxNet enhanced our ability to not only encode the meaning of words but to parse the syntactic structure of sentences. Powerful, open-source natural language processing toolkits like spaCy allow data scientists and machine learning engineers without deep expertise in natural language processing to get started. FastText? Just pip install! Fuelled by this progress in the field, we saw a quiet but strong trend in industry towards utilizing these new powerful natural language processing tools to build large-scale industry applications that turn 6.6M news articles into a numerical indicator for banking distress or use 3M news articles to assess systemic risk in the European banking system. Algorithms will not only help us make sense of the information in the world, they will help write content, too, and of course they will help bring our chit chatty chat bots to life.
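How can a model say anything about a word it has never seen? fastText builds word vectors from character n-grams, so an unseen word still shares pieces with known ones. The sketch below only illustrates that idea and is not the fastText API; the helper `char_ngrams`, the n-gram range of 3 to 6, and the example words are assumptions chosen for illustration.

```python
def char_ngrams(word, n_min=3, n_max=6):
    """Return the set of character n-grams of a word wrapped in boundary markers."""
    wrapped = f"<{word}>"  # "<" and ">" mark the start and end of the word
    return {
        wrapped[i:i + n]
        for n in range(n_min, n_max + 1)
        for i in range(len(wrapped) - n + 1)
    }


# Assume "awesome" was in the training vocabulary and "awesomeness" was not.
known = char_ngrams("awesome")
unseen = char_ngrams("awesomeness")

# The unseen word shares many subword units with the known word, so a vector
# for it can be composed from n-gram vectors learned during training.
shared = known & unseen
print(sorted(shared))
```

A model that stores one vector per whole word has nothing to offer for “awesomeness”; a subword model can fall back on the overlapping n-grams.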

In 2017, we expect more data products built on top of vast amounts of news data, especially data products that condense information into small, meaningful, actionable insights. Our world has become overwhelming; there is too much content. Algorithms can help! Somewhat ironically, we will also be using machines to create more content. A battle of machines.

In a world shaken by “fake news”, of course, one may regard these innovations with suspicion. As new technology enters the mainstream there is always hesitancy, but here the critics are right. How do we know the compression is not biased? How do we train people to evaluate the trustworthiness of the information they consume, especially when it has been condensed and computer generated? How do we fix the incentive problem of the news industry? Distribution platforms like Facebook do not incentivise deep, thoughtful writing; they monetize a few seconds of attention and are likely to feed existing biases. Not all challenges are technical, but they should concern technically minded people.

Who wins best AI writer of the year? Benjamin, a recurrent neural network that wrote the movie script Sunspring.

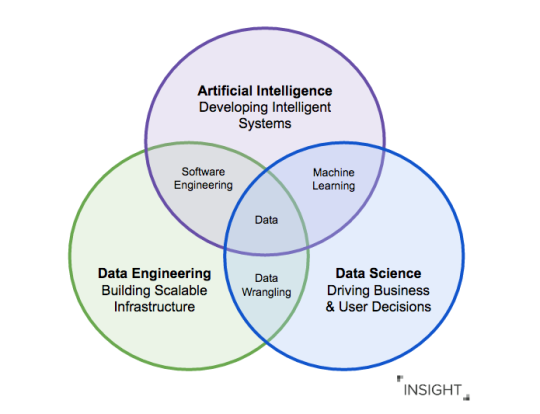

(4) The Proliferation Of Data Roles

Remember when data scientist was branded the sexiest job of the 21st century? How about machine learning engineer? AI, deep learning, or NLP specialist? As a discipline, data science is maturing. Organizations have increasingly recognized the value of data science to their business; entire companies are based on AI products leveraging the power of deep learning for image recognition (e.g., Clarifai) or offering natural language generation solutions (e.g., Narrative Science). With success comes greater recognition, appreciation of differences, and specialization. What’s more, the sheer complexity of new, emerging algorithms requires deep expertise. 2017 will see a proliferation of data roles.

The opportunity to specialize allows data people to focus on what they are good at and enjoy. Great. But there will be growing pains. It takes time to understand the meaning of new job titles; companies will be advertising data science roles when they want machine learning engineers and vice versa. Hype combined with a fierce battle over talent will lead to an overabundance of “trendy” roles, blurring useful differences. As a community, we will have to clarify what the new roles mean (and we’ll have to hold ourselves accountable when hiring hits a rough patch).

We will have to figure out processes for data scientists, machine learning engineers, and deep learning/AI/NLP specialists to work together productively, which will affect adjacent roles. Andrew Ng, Chief Scientist at Baidu, argues for the new role of AI Product Manager, who sets expectations by providing data folks with the test set (i.e., the data an already trained algorithm should perform well on). We may need transitional roles like the Chief AI Officer to guide companies in recognizing and leveraging the power of emerging algorithms.

2017 will be an exciting year for teams to experiment, but there will be battle scars.

(5) What Are You Doing To My Data?

By developing models to guide law enforcement, models to predict recidivism, and models to predict job performance based on Facebook profiles, data scientists are playing high-stakes games with other people’s lives. Models make mistakes; a perfectly qualified and capable candidate may not get her dream job. Data is biased; word embeddings (mentioned above) encode the meaning of words through the context in which they are used, allow simple analogies, and, trained on Google News articles, reveal gender stereotypes: “man is to programmer as woman is to homemaker”. Faulty reward functions can cause agents to go haywire. Models are powerful tools. Caution.

In 2016, Cathy O’Neil published Weapons of Math Destruction, a book on the potential harm of algorithms that got significant attention (e.g., Scientific American, NPR). FATML, a conference on Fairness, Accountability, and Transparency in Machine Learning, had record attendance. The EU issued new regulation that includes “the right to be forgotten”, giving individuals control over their data, and that restricts the use of automated, individual decision-making, especially if decisions the algorithm makes cannot be explained to the individual. Problematically, automated, individual decision-making is what neural networks do, and their inner workings are hard to explain.

2017 will see companies grappling with the consequences of this “right to an explanation”, which Oxford researchers have started to explore. In 2017, we may come to a refined understanding of what we mean when we say: “a model is interpretable”. Human decisions are interpretable in one sense: we can provide explanations for our decisions. In another sense they are not: we do not (yet) understand the brain dynamics underlying (complex) decisions. We will make progress on algorithms that help us understand model behavior and exercise the much needed caution when we build predictive models in areas like healthcare, education, and law enforcement.

In 2017, let’s commit to responsible data science and machine learning.

– Friederike

Many thanks to Jeremy Karnowski for helpful comments.