Mar 25, 2016 · post

H.P. Luhn and the Heuristic Value of Simplicity

The Fast Forward Labs team is putting final touches on our Summarization research, which explains approaches to making text quantifiable and computable. Stay tuned for a series of resources on the topic, including an online talk May 24 where we’ll cover technical details and survey use cases for financial services, media, and professional services with Agolo. Sign up here!

In writing our reports, we try not only to inform readers about the libraries, math, and techniques they can use to put a system into production today, but also the lessons they can learn from historical approaches to a given topic. Turning a retrospective eye towards past work can be particularly helpful when using an algorithm like a recurrent neural network. That’s because neural networks are notoriously hard to interpret: feature engineering is left to the algorithm, and involves a complex interplay between the weight of individual computing nodes and the connections that link them together. In the face of this complexity, it can be helpful to keep former, more simple techniques in mind as a heuristic guide - or model - to shape our intuition about how contemporary algorithms work.

For example, we found that H.P. Luhn’s 1958 paper The Automatic Creation of Literary Abstracts provided a simple heuristic to help wrap our heads around the basic steps that go into probabilistic models for summarizing text. (For those interested in history, Luhn also wrote a paper about business intelligence in 1958 that feels like it could have been written today, as it highlights the growing need to automate information retrieval to manage an unwieldy amount of information.) Our design lead, Grant Custer, designed a prototype you can play with to walk through Luhn’s method.

Here’s the link to access the live demo. Feel free to use the suggested text, or to play around your own (and share results on Twitter!).

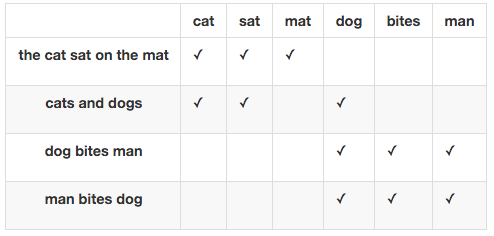

Luhn’s algorithm begins by transforming the content of sentences into a mathematical expression, or vector. He uses a “bag of words” model, which ignores filler words like “the” or “and”, and counts the number of times remaining words appear in each sentence.

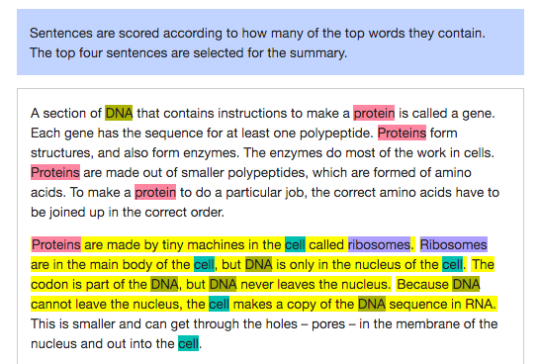

Luhn’s intuition was that a word that appears many times in a document is important to the document’s meaning. Under this assumption, a sentence that contains many of the words that appear many times in the overall document is itself highly representative of that document. In our demo, if a document’s most significant words are protein (appears 8 times) and DNA (appears 7 times), then this implies that the sentence “Proteins are made by tiny machines in the cell called ribosomes” is a useful one to extract in the summary. Once this sentence scoring is complete, the last step is simply to select those sentences with the highest overall rankings.

Luhn himself notes that his method for determining the relative significance of sentences “gives no consideration to the meaning of words or the arguments expressed by word combinations.” It is, rather, a “probabilistic one” based on counting. The algorithm does not select the sentence about ribosomes given its understanding of the importance ribosomes have to the topic in question; it selects that sentence because it densely packs together words that appear frequently across the longer document.

The contemporary approaches we study in our upcoming report build upon this basic theme. One approach, topic modeling using Latent Dirichlet Allocation (LDA), groups together words that co-occur into mathematical expressions called topics and then represents documents as a short vector of different topics weights (e.g., 50% words from the the protein topic, 30% words from the DNA topic, and 20% from the gene topic). While the mathematical models that determine these topics are much more complex than simply counting a bag of words, LDA borrows Luhn’s basic insight: that we can quantify semantic meaning as the relative distribution of like items in a data set.

Stay tuned for more exciting language processing and deep learning resources throughout the spring!

- Kathryn