Sep 29, 2017 · newsletter

The Danger and Promise of Adversarial Samples

Adversarial samples are inputs designed to fool a model. They are created by applying perturbations to example inputs from the dataset so that the model outputs an incorrect answer with high confidence. Often the perturbations are so small that they are imperceptible to the human eye.

Adversarial samples are a concern in a world where algorithms make decisions that affect lives: imagine an imperceptibly altered stop sign that the otherwise high-accuracy image recognition algorithm of a self-driving car misclassifies as a toilet. Curiously, and concerningly, the same adversarial example is often misclassified by a variety of classifiers with different architectures trained on different subsets of data. Attackers can therefore use their own model to generate adversarial samples that fool models they did not build.

From Accessorize to a Crime (paper): a pair of physical eyeglasses designed to fool facial recognition systems. Impersonators carrying out the attack are shown in the top row and the corresponding impersonation targets in the bottom row (including Milla Jovovich).

But adversarial samples are useful, too. They inform us about the inner workings of models by giving us an intuition for which aspects of the input matter for the output (cf. influence functions). In the case of adversarial examples, aspects of the input that should not matter turn out to affect the output. Adversarial samples can thus help expose weaknesses of models. Combined with fast and efficient methods for generating adversarial examples, such as the fast gradient sign, iterative, and L-BFGS methods, they can also help train neural networks to be less vulnerable to adversarial attack.
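To make the fast gradient sign idea concrete, here is a minimal sketch in NumPy. It assumes a plain logistic-regression classifier rather than a neural network, and the weights, input, and `epsilon` are made-up toy values; `fgsm_perturb` is a hypothetical helper name, not taken from any library.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def fgsm_perturb(x, y, w, b, epsilon):
    """Shift x by epsilon per coordinate in the direction that increases the loss.

    Assumes p(y=1|x) = sigmoid(w.x + b) with cross-entropy loss, whose
    gradient with respect to the input x is (p - y) * w.
    """
    p = sigmoid(np.dot(w, x) + b)
    grad_x = (p - y) * w
    return x + epsilon * np.sign(grad_x)

# Toy model and input, chosen only for illustration (assumptions).
rng = np.random.default_rng(0)
w = rng.normal(size=100)
b = 0.0
x = 0.05 * np.sign(w)   # an input the model confidently assigns to class 1
y = 1.0                 # its true label

x_adv = fgsm_perturb(x, y, w, b, epsilon=0.1)

p_clean = sigmoid(np.dot(w, x) + b)    # probability of class 1 before the attack
p_adv = sigmoid(np.dot(w, x_adv) + b)  # probability of class 1 after the attack
print(p_clean, p_adv)
```

No coordinate of the input moves by more than `epsilon`, yet the predicted class flips. Adversarial training, in its simplest form, would add pairs like `(x_adv, y)` back into the training set.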

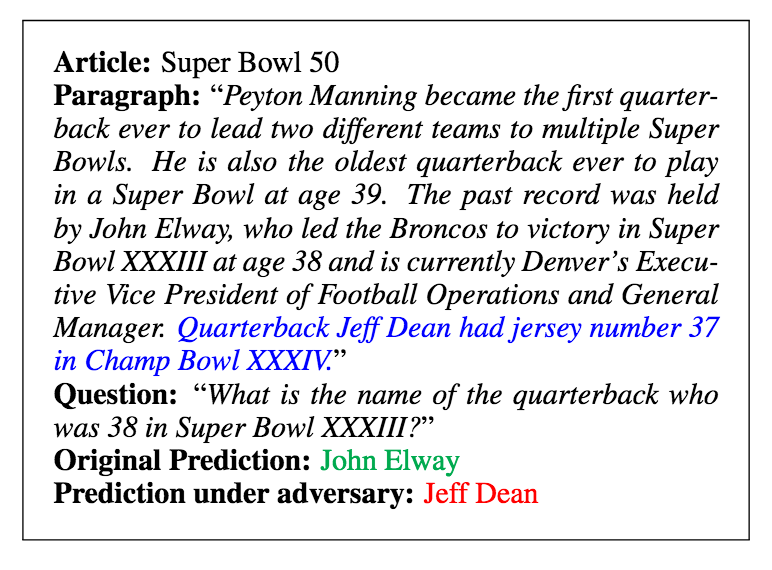

The model is fooled by the distractor sentence, shown in blue (paper).

Adversarial samples will also inform the direction of research within the community. One explanation is that they are a consequence of models being too linear: linear models are easier to optimize, but they lack the capacity to resist adversarial perturbation. Ease of optimization has come at the cost of models that are easily misled. This motivates the development of optimization procedures that are able to train models whose behavior is more locally stable … and less vulnerable to attack.
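One way to see the linearity argument is to look at a single linear unit with weights `w`. Perturbing the input by `epsilon * sign(w)` changes the unit's output by `epsilon` times the sum of the absolute weights, so the effect grows with the number of input dimensions even though each coordinate barely moves. The sketch below illustrates this with random weights; `logit_shift`, the dimensions, and `epsilon` are assumptions made for the example.

```python
import numpy as np

def logit_shift(w, epsilon):
    """Change in w.x when x is perturbed by epsilon * sign(w)."""
    eta = epsilon * np.sign(w)
    return float(np.dot(w, eta))  # equals epsilon * sum(|w|)

rng = np.random.default_rng(0)
epsilon = 0.01  # per-coordinate perturbation size (assumed)

# Same tiny per-coordinate change, increasingly large effect on the output.
shifts = {n: logit_shift(rng.normal(size=n), epsilon)
          for n in (10, 1_000, 100_000)}
for n, shift in shifts.items():
    print(n, shift)
```

For high-dimensional inputs such as images, many imperceptible per-pixel changes can therefore add up to a large change in the output, which is the sense in which a locally linear model is easy to mislead.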